The Images

A video is just a sequence of images, so we first need to render each frame individually. A digital image is a big grid of square pixels, each with an RGB value. For this I'm using the Pillow library for Python, but you can use whatever process you want, as long as you end up with an ordered sequence of image files.

To store the image I'm using a three-dimensional NumPy array: the first two dimensions index each pixel location, and the third holds the three RGB values. I'm also specifying 8-bit unsigned integers (`uint8`), since the RGB values are bounded between 0 and 255 anyway; this also matches the data type Pillow expects for an RGB image. Rendering the image consists of literally just iterating over the array and assigning a colour to each pixel. Once complete, the texture is converted into a PIL image using `fromarray`, which happily enough accepts a NumPy array directly. The image is then saved to disk as a PNG file, and this is repeated to get a sequence of images.

The images must be named in order. For example, I used the naming scheme "img#.png", where # is the image's place in the sequence. One pitfall is that the numbers have to be left-padded with zeros up to the largest number of digits present (img001.png rather than img1.png), otherwise the ordering of the images gets screwed up during the video rendering process.

Once the images are created and present in the working directory, you can run FFmpeg from the command line with the required arguments: `ffmpeg -framerate 24 -i "img%03d.png" "out.mp4"`. Here is a short explanation of the command:

- `-framerate` specifies the number of frames per second, i.e. the speed of the video. If you supply 48 images and set `-framerate` to 24, the video will last for two seconds.
- `-i` specifies the input files. The `%03d` inside the pattern matches files numbered with zero-padded 3-digit numbers and orders them by that number.
- The last argument, "out.mp4", is the name of the output file that will be created.

Sometimes you only need to copy the audio of a video. `ffmpeg -i origine.mp4 -vn -acodec copy audio.aac` does this: `-vn` drops the video stream, and `-acodec copy` copies the audio stream without re-encoding (so the source audio must already be AAC to fit the .aac output).

In Python you can call command-line executables from your program using the subprocess library. The command is written and saved to a string variable, its arguments are filled in with `.format(frameRate, getOutFileName())`, it is split into its constituent parts using the shlex library, and the result is passed to `subprocess.check_call` to run it. I use shlex to split the command instead of a simple `str.split(" ")` because command-line commands have lots of annoying edge cases where a naive split breaks, such as quoted file names containing spaces; shlex just handles them.
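Here is a minimal end-to-end sketch of the pipeline described above, assuming NumPy and Pillow are installed and, for the final step, ffmpeg is on the PATH. The frame size, the colour formula and the function names (`render_frame`, `write_frames`, `build_ffmpeg_cmd`, `render_video`) are hypothetical; only the ffmpeg arguments and the shlex/subprocess approach come from the text. The author's `frameRate` and `getOutFileName()` become plain parameters here.

```python
from __future__ import annotations

import shlex
import subprocess
from pathlib import Path

import numpy as np
from PIL import Image

# Hypothetical frame size for this sketch.
WIDTH, HEIGHT = 64, 48


def render_frame(t: float) -> np.ndarray:
    """Build one frame as an (HEIGHT, WIDTH, 3) uint8 array, pixel by pixel.

    The colour formula is a made-up gradient; t in [0, 1] fades in the blue channel.
    """
    frame = np.zeros((HEIGHT, WIDTH, 3), dtype=np.uint8)
    for y in range(HEIGHT):
        for x in range(WIDTH):
            frame[y, x] = (
                int(255 * x / (WIDTH - 1)),   # red grows left to right
                int(255 * y / (HEIGHT - 1)),  # green grows top to bottom
                int(255 * t),                 # blue grows over time
            )
    return frame


def write_frames(out_dir: Path, n_frames: int) -> list[Path]:
    """Save frames as img000.png, img001.png, ... (zero-padded to 3 digits)."""
    paths = []
    for i in range(n_frames):
        t = i / max(n_frames - 1, 1)
        image = Image.fromarray(render_frame(t))  # uint8 (H, W, 3) -> RGB image
        path = out_dir / f"img{i:03d}.png"
        image.save(path)
        paths.append(path)
    return paths


def build_ffmpeg_cmd(frame_rate: int, out_file: str) -> list[str]:
    """Fill in the command string, then split it with shlex (keeps quoted names intact)."""
    cmd = 'ffmpeg -framerate {} -i "img%03d.png" "{}"'.format(frame_rate, out_file)
    return shlex.split(cmd)


def render_video(work_dir: Path, frame_rate: int, out_file: str) -> None:
    """Run ffmpeg inside work_dir, so out_file is written there too.

    check_call raises CalledProcessError if ffmpeg exits with an error.
    """
    subprocess.check_call(build_ffmpeg_cmd(frame_rate, out_file), cwd=work_dir)
```

For example, after creating a `frames` directory, `write_frames(Path("frames"), 48)` followed by `render_video(Path("frames"), 24, "out.mp4")` should produce a two-second clip at `frames/out.mp4`. Note that `build_ffmpeg_cmd(30, "my video.mp4")` keeps `my video.mp4` as one argument, which a plain `str.split(" ")` would not. The per-pixel loop mirrors the description in the text; for larger frames, vectorised NumPy operations would be much faster.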

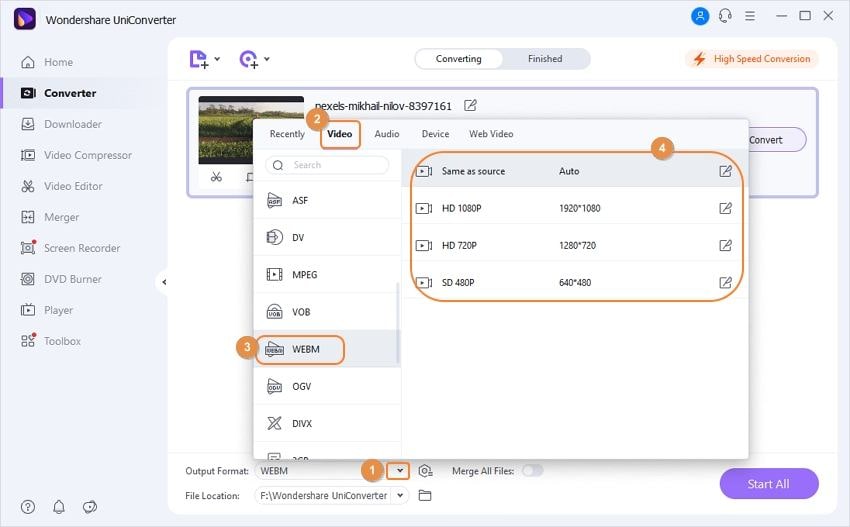

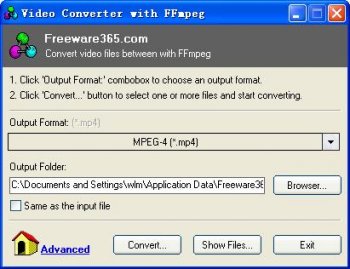

How to convert image formats with FFmpeg

If you're on a different platform, just look up how to set the PATH environment variable there and you should be good. OpenShot is open source, imports quite a few formats, and has an option to export a video as a PNG image sequence.
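Once PATH is set, one way to confirm that a Python script will actually find ffmpeg is `shutil.which`, which searches the directories on PATH for an executable. This is a small sketch; the helper name `find_ffmpeg` is hypothetical.

```python
from __future__ import annotations

import shutil


def find_ffmpeg(search_path: str | None = None) -> str | None:
    """Return the path of the ffmpeg executable, or None if it isn't found.

    search_path=None means "use the current PATH environment variable";
    pass an os.pathsep-separated string to search somewhere else instead.
    """
    return shutil.which("ffmpeg", path=search_path)


if __name__ == "__main__":
    location = find_ffmpeg()
    if location is None:
        print("ffmpeg was not found on PATH; add its folder to PATH and retry.")
    else:
        print(f"ffmpeg found at {location}")
```

Checking this before calling `subprocess.check_call` gives a clearer message than the `FileNotFoundError` raised when the executable is missing.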
